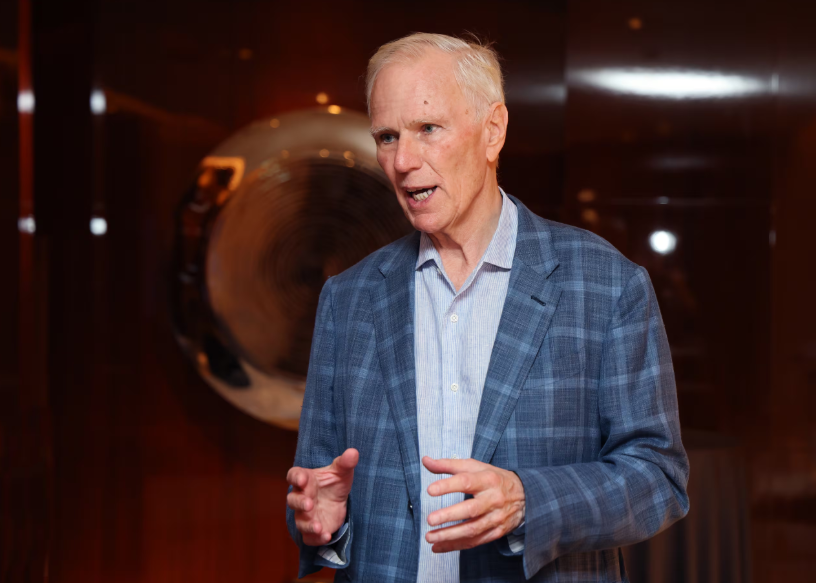

Philip Alston served as UN Special Rapporteur on extreme poverty and human rights. He has long drawn attention to how emerging technologies, from social welfare algorithms to surveillance systems, create risks for human dignity and privacy. His recent warning about AI adds urgency: as AI systems grow more powerful, they threaten not only data leaks or misuse but deeper harm to respect, autonomy, and selfhood.

How Artificial Intelligence is Challenging Human Dignity

Alston argues that AI undermines human dignity in several ways. One is depersonalization: people become data points rather than individuals. Algorithms may make decisions about hiring, welfare, policing, or services based on statistical profiles, with little human oversight. This reduces individuals to predicted risk and can reinforce existing biases.

Another dimension involves mimicry and automation. When AI chatbots, virtual assistants, or deepfake systems mimic human behavior closely, people may come to expect authenticity where there is none, or delegate relational tasks such as care, advice, and empathy to machines.

Privacy in the Age of Ubiquitous Data and Surveillance

Privacy, for Alston, is not just secrecy. AI systems gather massive amounts of data (location, behavior, preferences, biometrics) and often combine them in ways people neither know about nor consent to.

Surveillance systems, both public (government cameras, smart policing) and private (advertising, corporate tracking), gain power when AI can process images, voices, and text at scale. Combined with weak regulation or opaque algorithms, this can constrain people’s behavior, expression, and even freedom of movement. For vulnerable populations such as migrants, minorities, and activists, these threats are sharper: loss of privacy can become suppression, and loss of dignity can become marginalization.

Current Governance Gaps and Risks That Alston Highlights

Alston points out that many legal systems have not kept pace with AI’s speed. Laws on data protection, algorithmic transparency, fairness, and human oversight often lag behind or contain loopholes. He warns that without regulations ensuring accountability, so that those who build and use AI are answerable for its effects, harms will multiply unseen.

He also draws attention to how commercial incentives push technology in directions that prioritize profit or efficiency over dignity or privacy. Large corporations may deploy AI systems that gather data or profile users to optimize engagement, target ads, or predict behavior, even when those systems infringe on privacy or dignity. Because consumers often don’t fully understand what is happening, they can’t meaningfully consent or push back.

What Alston Recommends to Protect Rights, Dignity, and Privacy

Alston doesn’t simply issue warnings; he urges concrete measures. One is strengthening legal frameworks: data protection laws that require transparency, user consent, meaningful control over personal data, and severe penalties for breaches.

He also calls for algorithmic accountability: mandatory audits, a right to explanation so individuals can understand how decisions affecting them were made, and human oversight, especially for high-impact systems in health, law enforcement, and welfare.

Another recommendation is ensuring that users have recourse: ways to challenge decisions made by AI, correct errors, and seek remedy when the technology harms them. Public participation matters too: communities should help shape how AI is used in their environments, what kinds of systems are acceptable, and where lines should be drawn.

What to Watch for in AI Regulation and Social Change

If Alston’s warnings take hold, we may see more national and international laws on AI privacy, data protection, and algorithmic fairness. The European Union’s AI Act is one example already in place; similar frameworks may emerge elsewhere.

Watch also for court cases setting precedents about identity, data ownership, and algorithmic bias. The role of civil society, journalists, and watchdog groups will become more crucial in uncovering abuses.
